Ideally, an effective benchmark for VL-GenRMs should satisfy three key requirements:

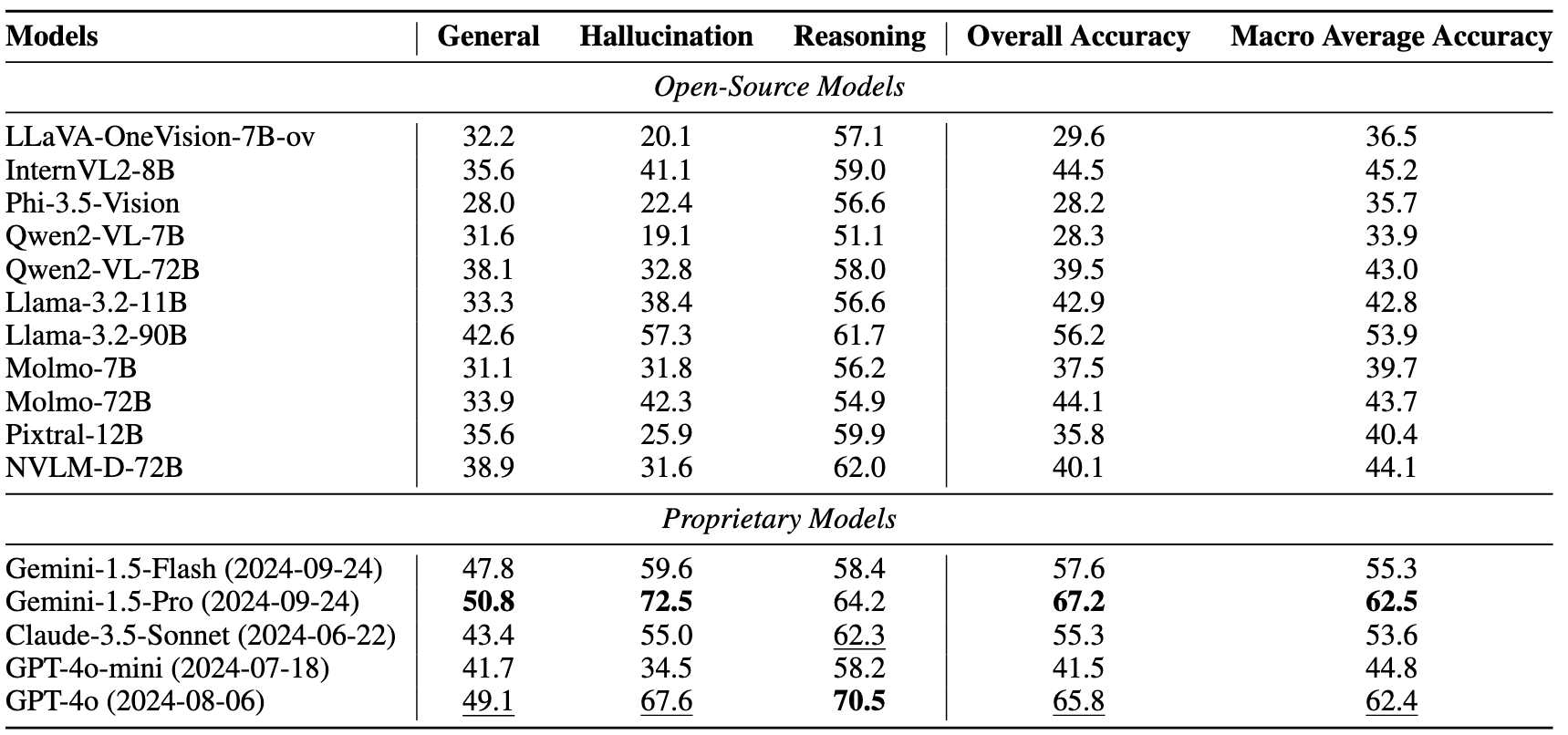

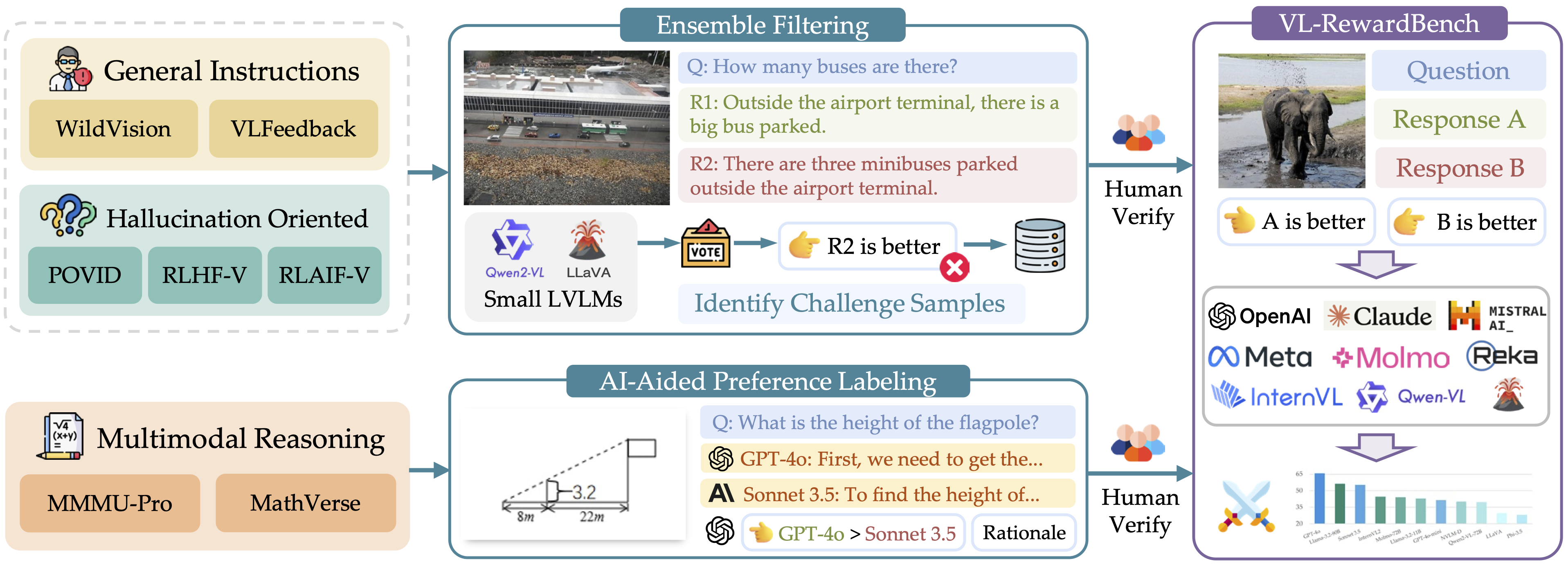

To satisfy criterion (a), our benchmark evaluates VL-GenRMs across three key application domains:

To ensure criterion (b), we employ targeted curation strategies:

- For source datasets with preference pairs, we use several small LVLMs jointly to select challenging samples, which our evaluation shows remain difficult even for much larger models.

- For reasoning tasks without annotated labels, we use strong commercial models to generate responses with explicit reasoning paths, followed by a quality assessment with GPT-4.
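
The first curation strategy can be illustrated with a minimal sketch. It shows one plausible reading of filtering with several small models: a preference pair is kept only when few of the small judges recover the annotated preference. The names `PreferencePair`, `Judge`, `select_challenging`, and the `max_correct` threshold are hypothetical and introduced only for illustration; the exact selection rule used for the benchmark is not specified in this section.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class PreferencePair:
    """A query with two candidate responses and an annotated preference."""
    query: str
    response_a: str
    response_b: str
    preferred: str  # "a" or "b"


# Hypothetical interface: a judge returns "a" or "b" for a given pair.
# In practice each judge would wrap a small LVLM; here it is any callable.
Judge = Callable[[PreferencePair], str]


def select_challenging(
    pairs: Sequence[PreferencePair],
    judges: Sequence[Judge],
    max_correct: int = 0,
) -> List[PreferencePair]:
    """Keep pairs that at most `max_correct` judges answer correctly.

    Assumption: "challenging" means the small judges mostly disagree with
    the annotated preference. The default keeps only pairs that every
    judge gets wrong.
    """
    if not judges:
        raise ValueError("at least one judge is required")
    kept = []
    for pair in pairs:
        # Count how many judges agree with the annotated label.
        n_correct = sum(judge(pair) == pair.preferred for judge in judges)
        if n_correct <= max_correct:
            kept.append(pair)
    return kept
```

Raising `max_correct` relaxes the filter and retains more samples, at the cost of admitting pairs that some small models already solve.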

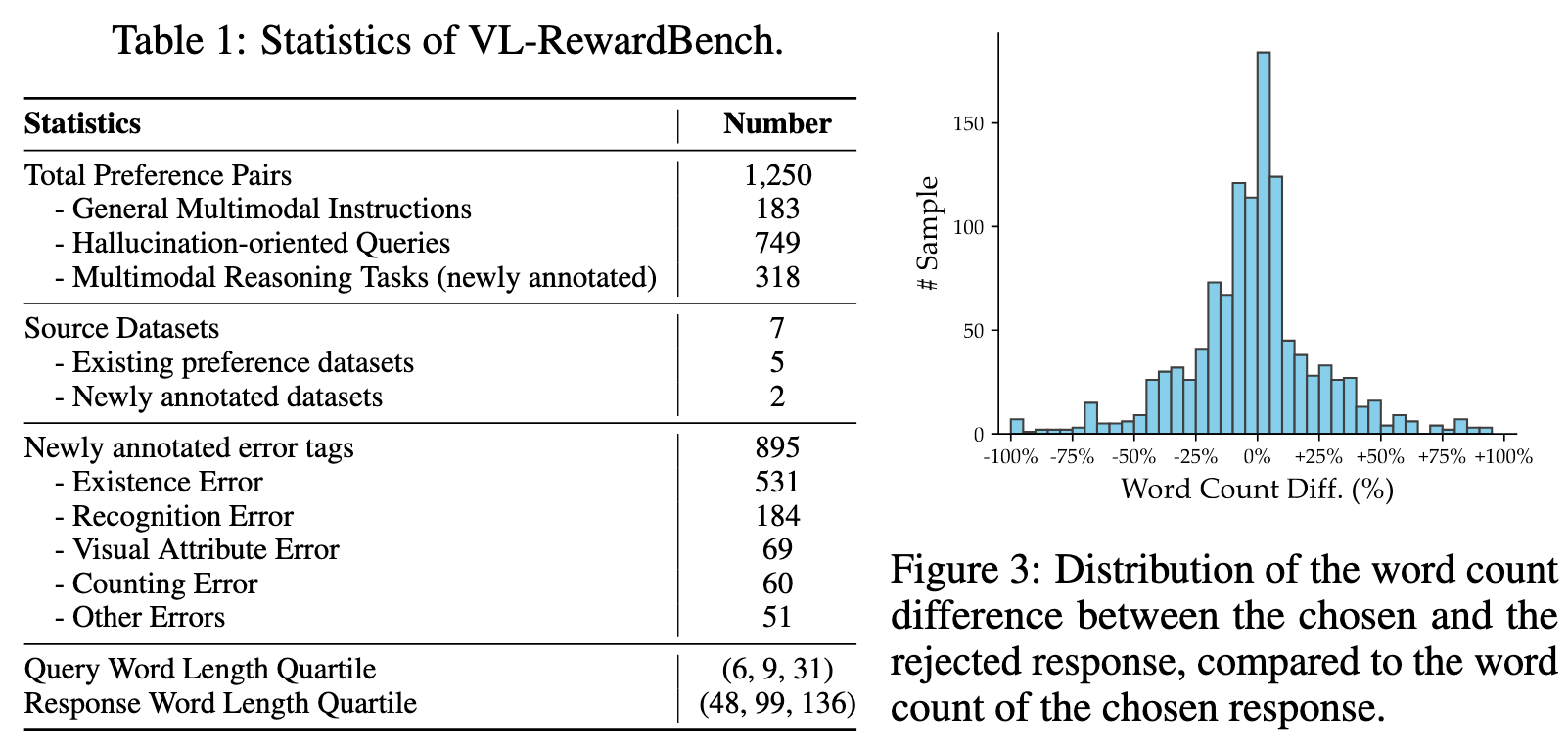

VL-RewardBench Statistics